Efficiency in Go: Divide and Conquer with Master Theorem

Hey there, tech enthusiasts! Today, we’re going to delve into a fascinating topic that will up your efficiency game in Go: the Master Theorem. If you’re just getting started with Go or you’re already a seasoned Gopher, understanding how to optimize algorithms using divide and conquer combined with the Master Theorem is a powerful skill. So, grab your favorite energy drink, and let’s dive into the world of algorithm efficiency!

Understanding Divide and Conquer

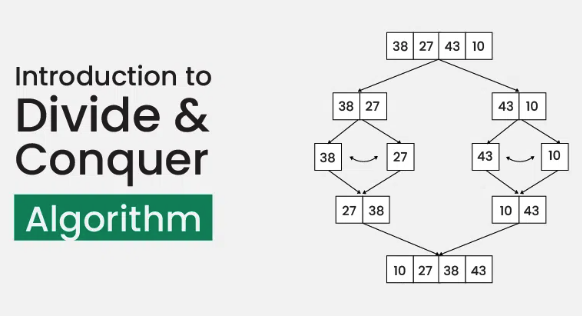

First things first, what exactly is divide and conquer? It’s a fundamental algorithm design paradigm based on multi-branched recursion. The idea is to break a problem down into smaller sub-problems, solve those sub-problems independently, and then combine their solutions to solve the original problem.

How Divide and Conquer Works

Here’s a quick breakdown:

- Divide: Split the original problem into smaller sub-problems.

- Conquer: Solve each sub-problem recursively.

- Combine: Merge the solutions of the sub-problems to get the solution for the original problem.

Examples of algorithms that use this approach include Merge Sort, Quick Sort, and Binary Search.

The Master Theorem

Now, let’s bring in the Master Theorem. This theorem provides a straightforward way to analyze the time complexity of recursive algorithms that follow the divide and conquer paradigm. The Master Theorem applies to recurrence relations of the form:

T(n) = aT(n/b) + f(n)Where:

- a: The number of sub-problems in the recursion.

- n/b: The size of each sub-problem, where n is the size of the problem.

- f(n): The cost outside the recursion, typically the work done to divide the problem and combine the results of sub-problems.

Master Theorem Cases

The Master Theorem has three cases to consider:

- If f(n) is O(nc) where c < logba, then T(n) is Θ(nlogba).

- If f(n) is Θ(nc) where c = logba, then T(n) is Θ(nclogn).

- If f(n) is Ω(nc) where c > logba, and af(n/b) ≤ kf(n) for some k < 1 and sufficiently large n, then T(n) is Θ(f(n)).

These cases provide a clear pathway to determining the time complexity of your algorithm, simplifying what can often feel like complex calculus into straightforward comparisons.

Applying Master Theorem in Go

Now, let’s see how we can apply the Master Theorem to a common divide and conquer problem: Merge Sort. We’ll walk through the implementation and then analyze its efficiency using the Master Theorem.

Merge Sort in Go

Here’s a simple implementation of Merge Sort in Go:

package main

import (

"fmt"

)

// Merge function that merges two sorted slices

func merge(left, right []int) []int {

result := []int{}

i, j := 0, 0

for i < len(left) && j < len(right) {

if left[i] < right[j] {

result = append(result, left[i])

i++

} else {

result = append(result, right[j])

j++

}

}

result = append(result, left[i:]...)

result = append(result, right[j:]...)

return result

}

// MergeSort function that sorts a slice using the merge sort algorithm

func mergeSort(arr []int) []int {

if len(arr) <= 1 {

return arr

}

mid := len(arr) / 2

left := mergeSort(arr[:mid])

right := mergeSort(arr[mid:])

return merge(left, right)

}

func main() {

arr := []int{38, 27, 43, 3, 9, 82, 10}

fmt.Println("Unsorted:", arr)

sorted := mergeSort(arr)

fmt.Println("Sorted:", sorted)

}In this code:

- The

mergeSortfunction divides the array into two halves until it can’t be divided anymore. - Then the

mergefunction combines these halves back together in sorted order.

Analyzing Merge Sort with Master Theorem

To analyze the time complexity of Merge Sort using the Master Theorem, we need to identify the parameters for its recurrence relation:

T(n) = 2T(n/2) + O(n)Here:

- a = 2 (since the array is divided into two halves)

- b = 2 (each half is of size n/2)

- f(n) = O(n) (the time complexity of the merge process)

Now, compare f(n) with nlogba:

log22 = 1, so nlog22 = nThis fits case 2 of the Master Theorem where f(n) is Θ(nc) and c = logba, thus:

T(n) = Θ(n log n)This means the time complexity of Merge Sort is Θ(n log n), which is pretty efficient for a sorting algorithm.

Conclusion

And there you have it! A closer look at how the Master Theorem simplifies the complexity analysis of divide and conquer algorithms, using Go as our trusty tool. By understanding and applying these concepts, you can write more efficient code, making your applications faster and more responsive.

Stay curious, keep coding, and remember: the more you learn about algorithms and their efficiencies, the more powerful your programming skills become. If you’re looking for further reading on the topic, check out this detailed Wikipedia article on divide-and-conquer algorithms.

Happy coding!