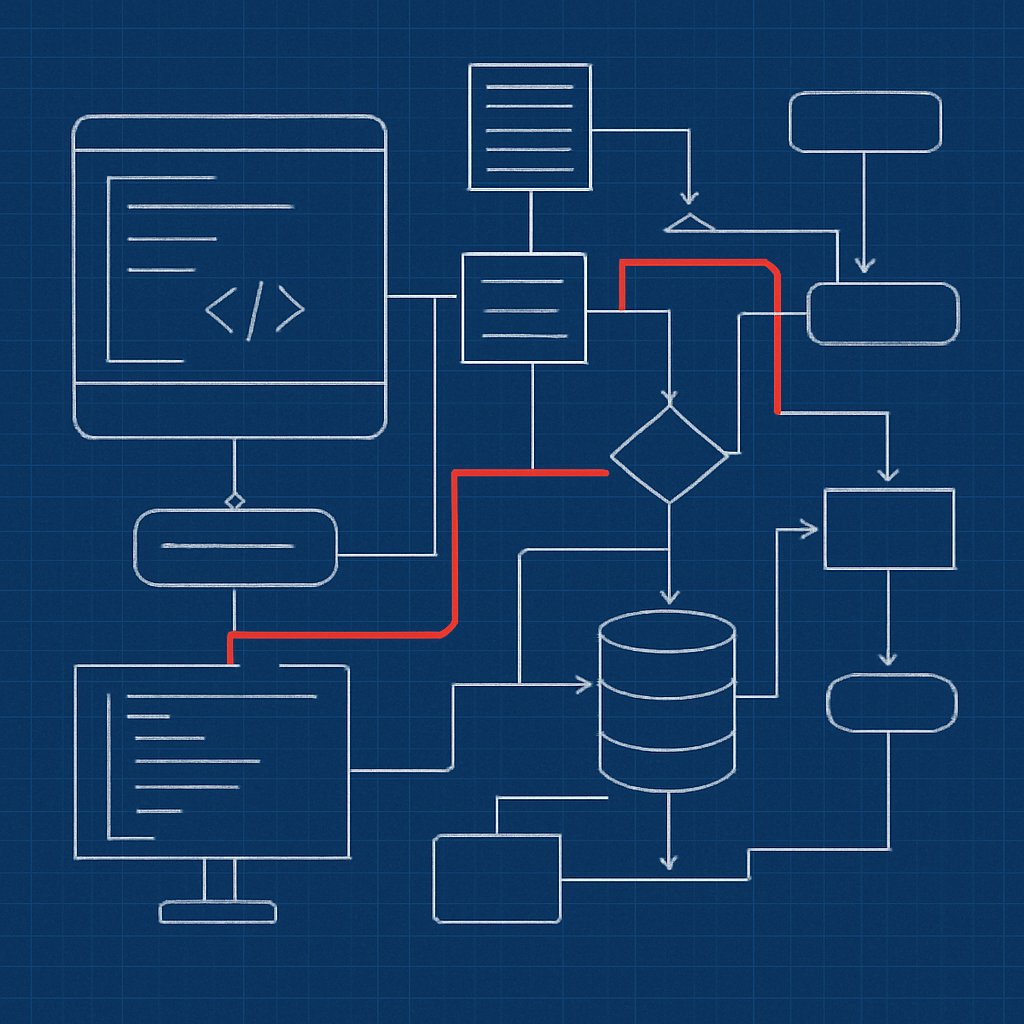

Claude Code can be transformative for development, but most users never unlock its full potential. The core shift: strictly separating planning from execution. This disciplined approach—rooted in explicit research, reviewable plans, and only then code generation—prevents costly mistakes, clarifies intent, and consistently yields stronger results. Here’s how to implement this workflow, why it works, and concrete steps to apply it to your engineering practice.

Key Takeaways:

- Disciplined planning with Claude Code prevents wasted effort and architecture drift

- Step-by-step workflow for separating research, planning, and implementation phases

- How to leverage Plan Mode for codebase analysis and reviewable, persistent plans

- Practical examples and directives for each phase—research, plan, annotate, execute, review

- Common pitfalls to avoid and how this approach compares to traditional prompt-based coding assistants

Why Separate Planning and Execution?

AI coding assistants are often used reactively: prompt, generate code, debug, repeat. This leads to shallow understanding and unpredictable results. The underlying problem isn’t the tool; it’s skipping the up-front thinking. As Boris Tane describes (source), the single most important principle is this: never let Claude write code until you’ve reviewed and approved a written plan.

- Deep research ensures Claude truly understands your system, not just surface-level function signatures.

- Persistent artifacts, such as research.md and plan files, allow for review, correction, and sharing.

- Reviewable plans mean you catch architectural issues before implementation, not after.

- Minimized rework: Token usage and dev cycles are spent refining plans, not rewriting broken code.

This mirrors senior engineering best practices: research, design, validate, execute. With AI tools, enforcing this workflow is essential to avoid implementing the wrong solution to the right problem. “Garbage in, garbage out” applies at the architecture level—if the research or plan is wrong, the code will be too.

The Claude Code Workflow in Practice

Boris Tane’s workflow and Plan Mode’s design (source) break down AI-assisted development into clear, disciplined phases. Here’s the structure:

| Phase | Action | Claude Role | Output Artifact |
|---|---|---|---|
| 1. Research | Direct Claude to read codebase in “great detail” and report findings | Read-only (no code changes) | research.md |
| 2. Plan | Draft an implementation plan (no code yet) | Generate plan, not code | plan.md or spec file |
| 3. Annotate | Iterate, annotate, and refine the plan for edge cases and details | Refine plan, ask for clarifications | Annotated plan |
| 4. Approve | Manually review and approve the plan/spec | Wait for confirmation | Approved plan |
| 5. Execute | Direct Claude to implement exactly as specified | Write code, check types, complete all steps | Code changes |
| 6. Feedback & Iterate | Test, review, and iterate as needed | Refactor, fix, extend | Final code, updated docs |

Crucially, Claude is only allowed to generate code after the plan is locked. This discipline prevents the most expensive failure mode in AI-assisted coding: shipping the wrong solution due to misunderstandings or incomplete context.
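The approval gate can be pictured as a small state machine. The following is a minimal sketch, not part of Claude Code itself: the `Phase`, `Workflow`, and `PlanNotApprovedError` names are hypothetical and exist only to illustrate that the Execute phase is unreachable until a human has approved the plan.

```python
from enum import Enum


class Phase(Enum):
    # Phase names and order follow the table above.
    RESEARCH = 1
    PLAN = 2
    ANNOTATE = 3
    APPROVE = 4
    EXECUTE = 5
    FEEDBACK = 6


class PlanNotApprovedError(RuntimeError):
    """Raised when execution is requested before the plan is approved."""


class Workflow:
    """Hypothetical tracker that refuses to skip the approval gate."""

    def __init__(self) -> None:
        self.phase = Phase.RESEARCH
        self.plan_approved = False

    def approve_plan(self) -> None:
        # Approval is a manual step and only makes sense in the APPROVE phase.
        if self.phase is not Phase.APPROVE:
            raise ValueError("the plan can only be approved in the APPROVE phase")
        self.plan_approved = True

    def advance(self) -> Phase:
        """Move to the next phase, blocking EXECUTE until the plan is approved."""
        if self.phase is Phase.FEEDBACK:
            return self.phase  # final phase; further iteration stays here
        next_phase = Phase(self.phase.value + 1)
        if next_phase is Phase.EXECUTE and not self.plan_approved:
            raise PlanNotApprovedError("approve the plan before any code is written")
        self.phase = next_phase
        return self.phase
```

Calling `advance()` three times reaches `Phase.APPROVE`; a fourth call raises `PlanNotApprovedError` until `approve_plan()` has been called.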

Real-World Examples: Step by Step

Phase 1: Research (Context Loading)

To force Claude to deliver more than a cursory scan, use an explicit prompt that asks for a persistent written report. For example:

read this folder in depth, understand how it works deeply, what it does and all its specificities. when that’s done, write a detailed report of your learnings and findings in research.md

Why it works: Phrases like “in depth” and “deeply” signal that a surface-level scan is not acceptable, and requiring research.md ensures Claude produces a reviewable artifact. You can verify Claude’s understanding and correct errors before moving to the next phase.
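Before moving on to planning, it can help to confirm mechanically that the research artifact exists and is not trivially short. The sketch below assumes the report is saved as research.md; the `research_ready` function and its `min_words` threshold are hypothetical, and passing the check is no substitute for actually reading the report.

```python
from pathlib import Path


def research_ready(path: Path, min_words: int = 200) -> bool:
    """Return True if the report exists and has at least min_words words.

    min_words is an arbitrary illustrative threshold, not a recommendation
    from the source workflow. Accuracy still has to be judged by a human.
    """
    if not path.is_file():
        return False
    text = path.read_text(encoding="utf-8")
    return len(text.split()) >= min_words
```

A call such as `research_ready(Path("research.md"))` could run as a quick sanity check before you send the planning prompt.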

Phase 2: Plan (No Code Yet!)

Once you’ve checked research.md for accuracy, instruct Claude to draft a step-by-step plan—still no code. Example:

now, based on your findings in research.md, write a detailed plan for implementing the new notification batching system. do not write any code; outline every step, decision point, and potential edge case.

Review this plan, annotate, and iterate until all requirements and edge cases are addressed.

Phases 3 and 4: Annotate & Approve

Add inline notes (for example, “add support for legacy client fallback here”) and have Claude respond or update the plan accordingly. Example annotated plan:

# Plan for Notification Batching (Annotated)

1. Analyze existing notification flows [done]

2. Identify all triggers for batching

3. Design new batching logic (include fallback for legacy clients)

4. Update API endpoints to support batch delivery

...

Only after you’ve confirmed every step is understood and agreed upon do you proceed to implementation.
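If plans follow the numbered format shown above, with a trailing `[done]` marker on completed steps, a few lines of code can list what is still open before sign-off. This is a sketch under that formatting assumption; `Step`, `parse_plan`, and `pending_steps` are hypothetical helper names, not part of any tool.

```python
import re
from dataclasses import dataclass

# Matches lines such as "1. Analyze existing notification flows [done]".
STEP_RE = re.compile(r"^\s*(\d+)\.\s+(.*?)(\s*\[done\])?\s*$")


@dataclass
class Step:
    number: int
    text: str
    done: bool


def parse_plan(plan_text: str) -> list[Step]:
    """Extract numbered steps; lines that are not numbered steps are ignored."""
    steps = []
    for line in plan_text.splitlines():
        match = STEP_RE.match(line)
        if match:
            number, text, marker = match.groups()
            steps.append(Step(int(number), text, marker is not None))
    return steps


def pending_steps(plan_text: str) -> list[Step]:
    """Return the steps that do not carry a [done] marker."""
    return [step for step in parse_plan(plan_text) if not step.done]
```

Run against the annotated plan above, `pending_steps` would return steps 2 through 4, since only step 1 is marked `[done]`.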

Phase 5: Execute (Code Generation)

With the plan finalized, direct Claude to implement it exactly as written:

implement the above plan exactly as written. check types as you go. don’t stop until the plan is fully implemented.

This keeps execution aligned with your architectural intent and prevents scope drift.

Full Example Workflow

# Example: Adding a Feature with Claude Code

# 1. Research

read the scheduler module in depth, document all edge cases and current bugs in research.md

# 2. Plan

write a step-by-step plan for refactoring the scheduler to support cron expressions, based on research.md

# 3. Annotate

add notes on edge cases (e.g., daylight saving time, leap seconds)

# 4. Approve

review, update, and sign off on plan.md

# 5. Execute

implement the plan as written, checking types at each stage

# 6. Feedback

test the new scheduler, log issues, iterate if necessary

Advanced Patterns and Team Collaboration

For teams or larger features, persist all research and plans as spec files in your repository. This enables:

- Peer review before any code is written

- Historical record of architectural decisions

- Faster onboarding for new contributors

- Reduced context loss and cognitive load

Claude Code’s Plan Mode, entered by pressing Shift + Tab twice (source), enforces this by locking the assistant into a read-only, planning-only state. No file changes, no code execution, no package installs—just analysis, plan, and reviewable output. This is especially valuable for fragile legacy code or projects requiring traceability.
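Plan Mode can also be requested when launching Claude Code from a script rather than with the keyboard shortcut. The sketch below only builds the command line. It assumes the `claude` CLI is installed and that your version accepts `--permission-mode plan` and `-p` (print mode), so confirm with `claude --help` before relying on it. The `plan_mode_command` helper is hypothetical.

```python
import shlex


def plan_mode_command(prompt: str) -> list[str]:
    """Build an argv list for a one-shot, read-only planning run.

    Assumption: the installed `claude` CLI supports `--permission-mode plan`
    and `-p`; verify against `claude --help` for your version.
    """
    return ["claude", "--permission-mode", "plan", "-p", prompt]


if __name__ == "__main__":
    argv = plan_mode_command("read the scheduler module in depth and report findings")
    # Print the shell-quoted command instead of running it.
    print(shlex.join(argv))
```

Passing the prompt as its own argv element avoids shell-quoting problems; the list can be handed to `subprocess.run(argv, check=True)` once you have verified the flags locally.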

Compared with “prompt-and-pray” workflows where code is generated immediately, this plan-first discipline leads to more robust, maintainable, and auditable software—especially as project complexity grows.

Common Pitfalls and Pro Tips

- Shallow prompts produce shallow results: Always require Claude to research “in great detail” and to write persistent reports (research.md).

- Skipping plan review leads to expensive fixes: The main risk isn’t syntax errors but misunderstood requirements. Never skip plan review; catch problems before you code.

- Iterate on plans, not code: Revising plans is cheap; refactoring code is not. Use annotation and review cycles in Plan Mode to catch issues early.

- Treat plans as living specs: In regulated or complex environments, treat the plan as a living specification. Commit it to your repo and enable peer feedback.

- Avoid context drift: Mixing planning and coding instructions in a single session can confuse the model. Keep phases separate and documented.
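The advice to commit plans alongside code can be checked mechanically, for example in a pre-merge script. The sketch below is illustrative only: `missing_plan`, the `PLAN_NAMES` set, and the `CODE_SUFFIXES` set are hypothetical, and the list of changed paths is assumed to come from your version control tooling.

```python
from pathlib import PurePosixPath

# File names taken from the article; extend to match your repository.
PLAN_NAMES = {"plan.md", "research.md"}
# Hypothetical set of source-file suffixes; adjust per project.
CODE_SUFFIXES = {".py", ".ts", ".go"}


def missing_plan(changed_paths: list[str]) -> bool:
    """Return True when code files changed but no plan or research file did."""
    paths = [PurePosixPath(p) for p in changed_paths]
    touches_code = any(p.suffix in CODE_SUFFIXES for p in paths)
    touches_plan = any(p.name in PLAN_NAMES for p in paths)
    return touches_code and not touches_plan
```

A change set containing only `src/scheduler.py` would be flagged, while one that also includes `docs/plan.md` would pass.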

Alternatives and Context: Claude Code in the Broader Ecosystem

Claude Code’s Plan Mode is designed to enforce a senior engineering workflow—research, plan, approve, execute—reducing “ready, fire, aim” mistakes and ensuring each step is reviewable before implementation (source). This approach stands in contrast to more reactive prompt-based coding tools, which often lack explicit phases or persistent artifacts for review.

Other AI coding assistants, such as GitHub Copilot and various open-source LLM agents, may offer more flexible integration or faster code generation for simple tasks, but often do not provide the same rigor around planning and review. The right tool depends on your project’s complexity, regulatory needs, and workflow preferences.

For more on how disciplined processes impact engineering outcomes, see Boris Tane’s workflow breakdown.

Conclusion and Next Steps

To consistently achieve high-quality results with Claude Code, strictly enforce the separation of research, planning, and execution. Require written research artifacts, review and annotate plans, and only generate code once the design is locked in. This mirrors senior engineering habits and is the best way to harness advanced AI tools for reliable, maintainable software.

Start by trying Plan Mode in your next feature or refactor—use Shift + Tab twice to activate—and benchmark your results against traditional prompt-driven workflows. For further details on effective technical documentation and engineering patterns, refer to the original sources: How I Use Claude Code and Mastering Claude Code Plan Mode.