Enterprise-Ready RAG: Step-by-Step Implementation with Code, Cost, and Performance Insights

If your organization needs to surface proprietary knowledge with fewer hallucinations, robust data security, and predictable costs, Retrieval-Augmented Generation (RAG) is a leading approach. This guide walks you through building a production-grade RAG pipeline for enterprise knowledge bases, from architecture and chunking to vector DB selection, evaluation, and operational pitfalls. All recommendations are grounded in research-backed best practices (source, source).

Key Takeaways:

- Deploy a production-ready RAG pipeline for enterprise knowledge with research-backed techniques

- Apply chunking strategies that maximize retrieval accuracy and LLM effectiveness

- Compare top vector databases on deployment, cost, and scalability

- Use evaluation metrics tuned to RAG’s unique strengths and weaknesses

- Avoid operational pitfalls: chunking errors, hallucination, embedding drift, and cost overruns

Prerequisites

- Python 3.10+ installed

- Access to a commercial or open-source LLM API

- Familiarity with Python, REST APIs, and Docker

- Enterprise documents (PDFs, HTML, DOCX) you have permission to process

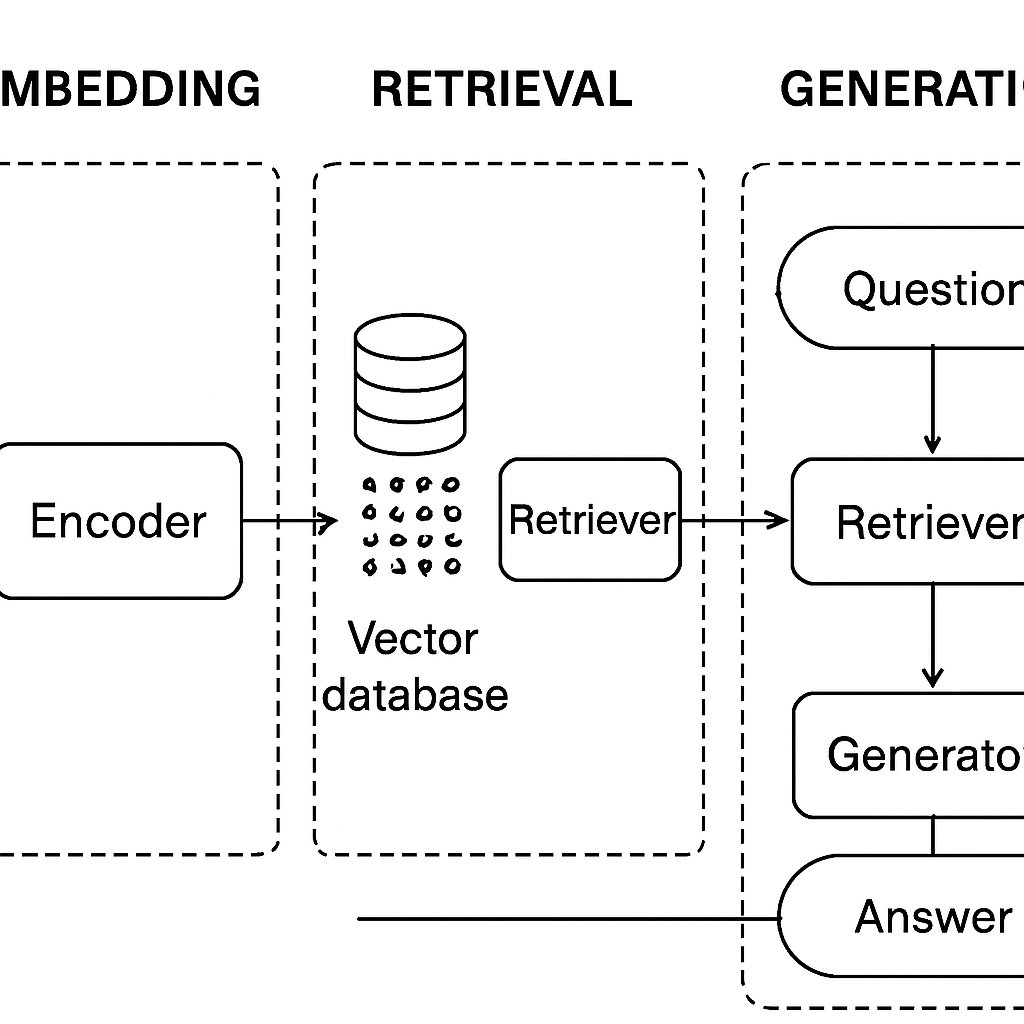

RAG Architecture: Embedding, Retrieval, and Generation

RAG bridges language models and real-world knowledge by combining:

- Embedding: Transform text content and user queries into semantic vectors using embedding models

- Retrieval: Search a vector database to find the most relevant content chunks for each query

- Generation: Feed retrieved information and the original query to the language model for answer synthesis

Detailed Workflow

- Preprocessing: Extract text from files (PDFs, HTML, DOCX) using libraries like pdfplumber, PyMuPDF, BeautifulSoup, or trafilatura (source).

- Chunking: Split extracted text into manageable pieces (see next section).

- Embedding: Embed chunks using open models (e.g., SentenceTransformers) or cloud APIs, depending on compliance needs.

- Indexing: Store vectors and metadata in a vector database (e.g., FAISS, Chroma, Weaviate, Pinecone).

- Query Flow: Embed user question → retrieve top-k chunks via similarity search → build prompt with retrieved context → send to LLM for answer.
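To make the query flow concrete, here is a minimal, dependency-free sketch of the retrieve-then-prompt step. The three-dimensional vectors are toy stand-ins for real embeddings, and the helper names (`cosine_similarity`, `retrieve_top_k`, `build_prompt`) are illustrative assumptions, not part of any library. A vector database performs the same ranking with an approximate index instead of a full scan.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def retrieve_top_k(query_vector, indexed_chunks, k=2):
    """Return the k chunks whose vectors are most similar to the query."""
    scored = [
        (cosine_similarity(query_vector, chunk["vector"]), chunk)
        for chunk in indexed_chunks
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [chunk for _, chunk in scored[:k]]

def build_prompt(question, retrieved_chunks):
    """Place the retrieved text ahead of the question."""
    context = "\n".join(chunk["text"] for chunk in retrieved_chunks)
    return f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"

# Toy 3-dimensional vectors stand in for real embeddings (assumption).
index = [
    {"text": "Remote work requires VPN access.", "vector": [0.9, 0.1, 0.0]},
    {"text": "Expense reports are due monthly.", "vector": [0.0, 0.2, 0.9]},
    {"text": "Laptops must use disk encryption.", "vector": [0.7, 0.6, 0.1]},
]
query_vector = [1.0, 0.2, 0.0]  # pretend embedding of the question
top_chunks = retrieve_top_k(query_vector, index, k=2)
prompt = build_prompt("What are the remote work security rules?", top_chunks)
print(prompt)
```

With these toy vectors the expense-report chunk scores lowest, so it is left out of the prompt.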

Why this matters: RAG enables you to inject up-to-date and proprietary content into LLM answers, reducing hallucinations and improving factual accuracy (source).

Chunking Strategies for Enterprise Documents

Chunking is foundational to RAG retrieval quality. The way you split documents directly impacts answer relevance, context length, and performance (source).

Common Chunking Approaches

- Fixed-size window: Split text by a set number of tokens (e.g., 256–512), often with overlap to preserve context.

- Section-aware: Split on document structure (headings, FAQ pairs, paragraphs) for more semantically coherent chunks.

- Adaptive: Use sentence boundaries or NLP techniques to avoid breaking concepts mid-way.
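As a rough illustration of the fixed-size window approach, the sketch below splits text into overlapping windows. It counts whitespace-separated words as a stand-in for tokens, which is an assumption: a production pipeline would count with the tokenizer of its embedding model. The function name `chunk_fixed_window` is hypothetical.

```python
def chunk_fixed_window(text, chunk_size=256, overlap=32):
    """Split text into windows of `chunk_size` tokens that share `overlap` tokens."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    tokens = text.split()  # whitespace split as a rough stand-in for a tokenizer
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        window = tokens[start:start + chunk_size]
        chunks.append(" ".join(window))
        if start + chunk_size >= len(tokens):
            break  # this window already reached the end of the text
    return chunks

sample = " ".join(f"w{i}" for i in range(10))
pieces = chunk_fixed_window(sample, chunk_size=4, overlap=1)
print(pieces)
```

Each window starts `chunk_size - overlap` tokens after the previous one, so the last token of one chunk is repeated as the first token of the next in this example.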

Chunking Best Practices

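- Keep chunks small enough that the top-k retrieved chunks, the question, and the answer all fit within the LLM’s context window (often <2,000 tokens per chunk for GPT-3.5 class models), and within your embedding model’s maximum input length, since many embedding models truncate longer inputs.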

- Use overlap (e.g., 30–50 tokens) to avoid loss of context across chunk boundaries.

- Store metadata (e.g., source, page number, section) with each chunk for traceability and filtering.
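One way to combine these practices is to pack whole sentences into chunks up to a size budget and attach metadata to each chunk. The sketch below assumes a naive regex sentence splitter and counts words rather than model tokens. The `chunk_index` field and the function name `chunk_by_sentences` are hypothetical additions for illustration.

```python
import re

def chunk_by_sentences(text, source, section, max_tokens=200):
    """Pack whole sentences into chunks of at most `max_tokens` words,
    attaching source metadata to every chunk."""
    # Naive sentence splitter: break after ., !, or ? followed by whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    chunks, current, current_len = [], [], 0
    for sentence in sentences:
        length = len(sentence.split())
        # Start a new chunk when adding this sentence would exceed the budget.
        if current and current_len + length > max_tokens:
            chunks.append(" ".join(current))
            current, current_len = [], 0
        current.append(sentence)
        current_len += length
    if current:
        chunks.append(" ".join(current))
    return [
        {"text": chunk, "metadata": {"source": source, "section": section, "chunk_index": i}}
        for i, chunk in enumerate(chunks)
    ]

records = chunk_by_sentences(
    "Employees may work remotely. A VPN is required. Report incidents within 24 hours.",
    source="policy1.pdf", section="Remote Work", max_tokens=8,
)
for record in records:
    print(record)
```

Note that a single sentence longer than `max_tokens` still becomes its own oversized chunk in this sketch, so a fallback split would be needed for very long sentences.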

Why this matters: Poor chunking can lead to missing key information or retrieving irrelevant passages, which undermines answer accuracy.

Vector Database Selection: Cost, Scale, and Ecosystem

Your choice of vector database shapes retrieval latency, ops overhead, and cost profile. Here’s a comparison of the most commonly used vector DBs in RAG pipelines (source):

| Database | Deployment | Scale | Key Features | Cost |
|---|---|---|---|---|
| Pinecone | Managed cloud | Billions of vectors | Real-time retrieval, horizontal scaling, hybrid search, RBAC | Usage-based (starts around $70/month for dev workloads) |
| Weaviate | Managed/cloud/on-prem | Millions to billions | Hybrid search, multi-tenancy, GraphQL API | Free for small cloud, enterprise pricing by quote |
| Chroma | Local/cloud | Up to millions (RAM-limited) | Python-native, open-source, easy setup | Free (open source) |
| FAISS | Local only | Millions (RAM-limited) | Very fast, no network, DIY ops | Free (open source) |

Selection Criteria:

- Compliance: FAISS or Weaviate (on-prem) for strict data residency; Pinecone or Weaviate (cloud) for managed operations.

- Scale: Pinecone and Weaviate handle >10M documents; Chroma/FAISS are best for small to medium-sized KBs.

- API Ecosystem: Pinecone and Weaviate offer strong Python and REST support.

Evaluating RAG: Precision, Factuality, and Latency

RAG evaluation must address both retrieval relevance and generation factuality (source).

Key Metrics

- Retrieval Precision@k and Recall@k: Precision@k is the share of the top-k retrieved chunks that are relevant; Recall@k is the share of all relevant chunks that appear in the top k.

- Context Relevance: Whether retrieved chunks actually address the user’s query.

- Answer Factuality: Whether the LLM’s response is grounded in the retrieved content, not hallucinated.

- Latency: Total time from query to answer (retrieval plus LLM).

- Cost per Query: Sum of embedding, vector DB, and LLM inference costs.

Human review is important for answer factuality. Automated metrics (ROUGE, BLEU, BERTScore) can supplement manual checks but not replace them.
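The retrieval metrics can be computed from a small labelled set of queries. The sketch below assumes you have, for each query, the ranked list of retrieved chunk IDs and a hand-labelled set of relevant chunk IDs. The IDs shown are made up for illustration, and retrieved IDs are assumed to be unique.

```python
def precision_at_k(retrieved_ids, relevant_ids, k):
    """Share of the top-k retrieved chunks that are relevant."""
    top_k = retrieved_ids[:k]
    if not top_k:
        return 0.0
    hits = sum(1 for chunk_id in top_k if chunk_id in relevant_ids)
    return hits / len(top_k)

def recall_at_k(retrieved_ids, relevant_ids, k):
    """Share of all relevant chunks that appear in the top-k results."""
    if not relevant_ids:
        return 0.0
    hits = sum(1 for chunk_id in retrieved_ids[:k] if chunk_id in relevant_ids)
    return hits / len(relevant_ids)

retrieved = ["c7", "c2", "c9", "c4"]   # ranked retrieval output (illustrative IDs)
relevant = {"c2", "c4", "c5"}          # hand-labelled relevant chunks
p4 = precision_at_k(retrieved, relevant, k=4)
r4 = recall_at_k(retrieved, relevant, k=4)
print(f"precision@4={p4:.2f} recall@4={r4:.2f}")
```

Here two of the four retrieved chunks are relevant (precision 0.50) and two of the three relevant chunks were found (recall 0.67). This sketch divides precision by the number of results actually returned; some definitions divide by k even when fewer results come back.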

RAG Implementation: Example Workflow

The following workflow outlines a typical RAG implementation using Chroma (local), SentenceTransformers for embedding, and a commercial LLM API. Adjust for Pinecone or Weaviate by modifying the database integration step.

```python
# Preprocess and chunk documents
# (Extract text using pdfplumber, PyMuPDF, or BeautifulSoup as appropriate)
from sentence_transformers import SentenceTransformer
import chromadb

# Example: prepare your text chunks (replace with actual extraction logic)
chunks = [
    {"text": "Company policy on remote work...", "metadata": {"source": "policy1.pdf", "section": "Remote Work"}},
    {"text": "Security protocol for data transfer...", "metadata": {"source": "policy2.pdf", "section": "Security"}},
    # ... more chunks
]

# Embed chunks
embedder = SentenceTransformer("all-MiniLM-L6-v2")
chunk_texts = [chunk["text"] for chunk in chunks]
chunk_vectors = embedder.encode(chunk_texts)

# Store in ChromaDB (in-memory client; data is lost when the process exits)
client = chromadb.Client()
collection = client.get_or_create_collection("company_kb")
collection.add(
    ids=[f"chunk-{i}" for i in range(len(chunks))],  # Chroma requires a unique ID per record
    embeddings=chunk_vectors.tolist(),
    documents=chunk_texts,
    metadatas=[chunk["metadata"] for chunk in chunks],
)

# Query time: embed the user question and retrieve the top-k relevant chunks
user_query = "What is the company's remote work security policy?"
query_vector = embedder.encode([user_query])[0]
results = collection.query(
    query_embeddings=[query_vector.tolist()],
    n_results=min(3, collection.count()),  # never request more chunks than are stored
)
context = "\n\n".join(results["documents"][0])

# Construct prompt and call LLM API (pseudocode - use your LLM provider's SDK)
prompt = f"Context: {context}\n\nQuestion: {user_query}\nAnswer:"
# Send prompt to LLM and return answer
# (Refer to your LLM provider's official documentation for exact API usage)
```

This pattern enables you to ground LLM responses in enterprise-approved content, improving answer accuracy and transparency.
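To support the transparency goal, the prompt can carry each chunk's source so the model is able to cite it. The sketch below is one possible way to do this. It assumes the retrieval result is a dictionary with parallel `documents` and `metadatas` lists, mirroring the shape of the query result in the example above; the function name `build_cited_prompt` and the instruction wording are illustrative assumptions.

```python
def build_cited_prompt(question, results):
    """Format retrieved chunks with numbered source tags so answers can cite them."""
    documents = results["documents"][0]
    metadatas = results["metadatas"][0]
    lines = []
    for number, (text, meta) in enumerate(zip(documents, metadatas), start=1):
        source = meta.get("source", "unknown")
        section = meta.get("section", "unknown")
        lines.append(f"[{number}] ({source}, {section}) {text}")
    context = "\n".join(lines)
    return (
        "Answer using only the numbered context below and cite sources like [1]. "
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    )

# Hand-written stand-in shaped like a retrieval result (assumption).
example_results = {
    "documents": [["Company policy on remote work...", "Security protocol for data transfer..."]],
    "metadatas": [[
        {"source": "policy1.pdf", "section": "Remote Work"},
        {"source": "policy2.pdf", "section": "Security"},
    ]],
}
cited_prompt = build_cited_prompt("What is the remote work security policy?", example_results)
print(cited_prompt)
```

Numbered tags make it easier for a reviewer to trace each claim in the answer back to a specific document and section.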

Cost and Latency Analysis

RAG system costs break down into three main areas: embedding, vector DB, and LLM inference (source).

| Component | Example Provider | Cost | Typical Latency | Notes |
|---|---|---|---|---|
| Embedding | OpenAI ada-002 | $0.0001/1K tokens | 100–600ms (API) | Batching lowers cost |
| Vector DB | Pinecone S1 | $70+/month (dev) | 10–100ms/query | Managed, scales with usage |
| LLM Inference | OpenAI GPT-3.5 | $0.002/1K tokens | 700–1500ms | Model choice impacts cost |

Build vs Buy Analysis

- FAISS/Chroma: Minimal infrastructure cost, but self-managed and RAM-limited.

- Pinecone/Weaviate: Managed, high scale, but incurs recurring cost and potential data egress fees.

- Embedding: Local models are free, but may be slower or less accurate than top cloud APIs.

- LLM: Pay-per-token model; costs scale with usage and context window.

For a typical use case (10,000 queries/month, 500 tokens/query):

- Embedding: negligible after initial indexing

- Vector DB: $70+/month (managed) or free (local)

- LLM: ~$10/month (OpenAI GPT-3.5)

- Total: $80+/month for managed; roughly $10/month with a local vector DB, since the LLM API charge remains (excluding ops effort)
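The arithmetic behind this estimate can be reproduced with a short calculation. The sketch below uses the example prices quoted in this section, which may differ from current provider pricing, and the function name `monthly_llm_cost` is hypothetical.

```python
def monthly_llm_cost(queries_per_month, tokens_per_query, price_per_1k_tokens):
    """Estimate monthly LLM spend from query volume and per-1K-token pricing."""
    total_tokens = queries_per_month * tokens_per_query
    return total_tokens / 1000 * price_per_1k_tokens

# Example figures from this section (assumed, not current price quotes).
llm_cost = monthly_llm_cost(queries_per_month=10_000, tokens_per_query=500,
                            price_per_1k_tokens=0.002)
managed_vector_db_cost = 70.0  # entry-level managed vector DB, per month
total_managed = llm_cost + managed_vector_db_cost

print(f"LLM: ${llm_cost:.2f}/month")
print(f"Managed total: ${total_managed:.2f}/month")
```

10,000 queries at 500 tokens each is 5 million tokens, which at $0.002 per 1K tokens comes to about $10. Swapping in your own volumes and prices shows quickly which component dominates the bill.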

Common Pitfalls and Pro Tips

- Chunk size errors: Oversized chunks lead to irrelevant retrievals; undersized chunks fragment context. Tune sizes with real query tests.

- Forgetting metadata: Always store chunk source and section for traceability and compliance.

- Embedding drift: Changing embedding models invalidates stored vectors, because vectors from different models are not comparable. Re-embed your corpus if you switch models.

- Unmanaged costs: LLM costs can spike with large prompts. Limit chunk count and context size.

- Latency: Batch embeddings and use local vector DBs for low-latency use cases.

- Compliance: Never send sensitive data to external APIs without contractual safeguards.
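As one way to cap prompt size, the sketch below keeps only the highest-ranked chunks that fit within both a chunk count and a token budget. It estimates tokens by counting whitespace-separated words, which is an assumption; a real tokenizer will count differently. The function name and default limits are illustrative.

```python
def select_chunks_within_budget(ranked_chunks, max_chunks=3, max_context_tokens=1000):
    """Keep the highest-ranked chunks that fit within a chunk count and token budget."""
    selected, used_tokens = [], 0
    for chunk in ranked_chunks[:max_chunks]:
        chunk_tokens = len(chunk.split())  # rough estimate; real tokenizers count differently
        if used_tokens + chunk_tokens > max_context_tokens:
            break  # stop at the first chunk that would overflow the budget
        selected.append(chunk)
        used_tokens += chunk_tokens
    return selected, used_tokens

# Three retrieved chunks, already sorted from most to least relevant.
ranked = ["alpha " * 400, "beta " * 500, "gamma " * 300]
kept, used = select_chunks_within_budget(ranked, max_chunks=3, max_context_tokens=1000)
print(len(kept), used)
```

In this example the third chunk would push the context to 1,200 estimated tokens, so only the first two are kept. Stopping at the first overflow preserves ranking order instead of skipping ahead to smaller, lower-ranked chunks.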

Conclusion & Next Steps

This guide delivers a practical blueprint for implementing enterprise-grade RAG: robust architecture, careful chunking, vector DB trade-offs, and cost controls. To go deeper into advanced orchestration and scaling, see our production RAG stack walkthrough. For further technical reading, reference the LangChain retriever docs and this advanced RAG methods overview.