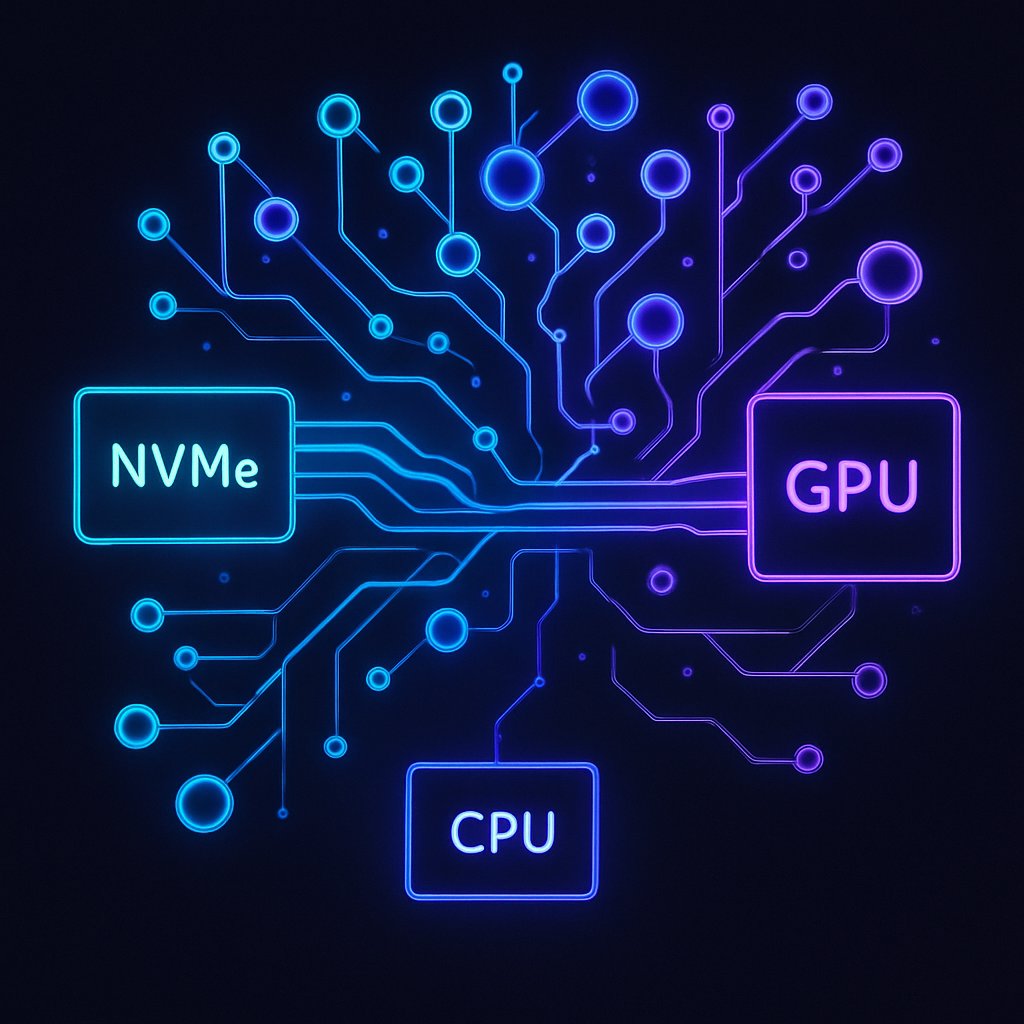

Learn how to run Llama 3.1 70B on an RTX 3090 using NVMe-to-GPU technology, bypassing the CPU for efficient local AI inference.

Learn how to run Llama 3.1 70B on an RTX 3090 using NVMe-to-GPU technology, bypassing the CPU for efficient local AI inference.

ggml.ai’s partnership with Hugging Face marks a pivotal moment for local AI development, enhancing sustainability and community support.