Here's a question for you: Could AI be as good as, or better than, humans at image recognition, especially when it comes to nude images? And how does the classification of NSFW images relate to cloud storage? Before we can answer these questions reasonably, let's look at the bigger picture.

Often, you can come across NSFW images without consenting to see them, or have your own nude images shared without your consent. This is why it's essential to have a classifier tool that identifies and flags nude or NSFW images effectively.

Prereq: Basics of Image Recognition

Image recognition refers to identifying and detecting objects, people, text, and other features within an image. This technology can be used for various purposes, such as security systems, self-driving cars, and image search engines. It uses machine learning algorithms to analyze images and extract information from them. The process is complex and involves several steps: image preprocessing, feature extraction, and classification.
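To make the three steps concrete, here is a minimal toy sketch in plain Python. It is not how a production recognizer works: the function names, the two made-up classes, and the reference vectors are all assumptions chosen only to show preprocessing, feature extraction, and classification as separate stages.

```python
# Toy illustration of the three stages; every name here is hypothetical.

def preprocess(pixels):
    """Scale 8-bit RGB tuples to floats in [0, 1]."""
    return [(r / 255.0, g / 255.0, b / 255.0) for r, g, b in pixels]


def extract_features(pixels):
    """Reduce the image to one feature vector: the mean of each channel."""
    n = len(pixels)
    return tuple(sum(p[c] for p in pixels) / n for c in range(3))


def classify(features, centroids):
    """Return the label whose reference vector is closest to the features."""
    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(centroids, key=lambda label: sq_dist(features, centroids[label]))


# Made-up reference vectors for two made-up classes.
centroids = {"mostly_red": (1.0, 0.0, 0.0), "mostly_blue": (0.0, 0.0, 1.0)}

image = [(250, 10, 5), (240, 20, 30), (255, 0, 0)]  # a tiny 3-pixel "image"
label = classify(extract_features(preprocess(image)), centroids)
print(label)  # mostly_red
```

Real systems replace the hand-written feature step with features learned by a neural network, but the overall flow is the same.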

Key Parameters of Nude Images Classification

Identifying key parameters for nude image classification can be challenging, as it involves balancing the need to identify nudity accurately against respecting privacy and avoiding false positives. Some critical parameters for a nude image algorithm, as suggested by ChatGPT, include:

- Pixel analysis: This can include analyzing the color, texture, and pattern of pixels in an image to determine if it contains nudity.

- Machine learning: Using ML algorithms and models to train the system to identify nudity in images and improve its accuracy over time.

- False positive rate: The rate of images incorrectly flagged as containing nudity should be low to maintain the system’s reliability.
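As a rough illustration of the pixel analysis idea, the sketch below counts how many pixels fall inside a commonly cited RGB skin-tone range. The thresholds are an assumption taken from a well-known rule of thumb, not the exact rule any particular library uses, and the helper names are hypothetical.

```python
def is_skin(r, g, b):
    """Rule-of-thumb RGB skin test (an assumption, not a library's exact rule)."""
    return (
        r > 95 and g > 40 and b > 20
        and max(r, g, b) - min(r, g, b) > 15
        and abs(r - g) > 15
        and r > g and r > b
    )


def skin_ratio(pixels):
    """Fraction of (r, g, b) pixels that pass the skin test."""
    if not pixels:
        return 0.0
    return sum(1 for p in pixels if is_skin(*p)) / len(pixels)


# Four made-up pixels: two skin-like tones, one blue, one near-black.
sample = [(220, 170, 140), (200, 150, 120), (30, 60, 200), (10, 10, 10)]
print(skin_ratio(sample))  # 0.5
```

A high skin ratio alone says little about nudity (think of a close-up portrait), which is one reason purely pixel-based checks tend to produce false positives.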

How to Program Image Recognition for Nude Images without Machine Learning

So, that's enough about how it works. Now, let's look at how you can write a Python program to do it. But first, what are the things you need to make it work? Let's see below.

Step 1: Import necessary libraries for image recognition

Requirements

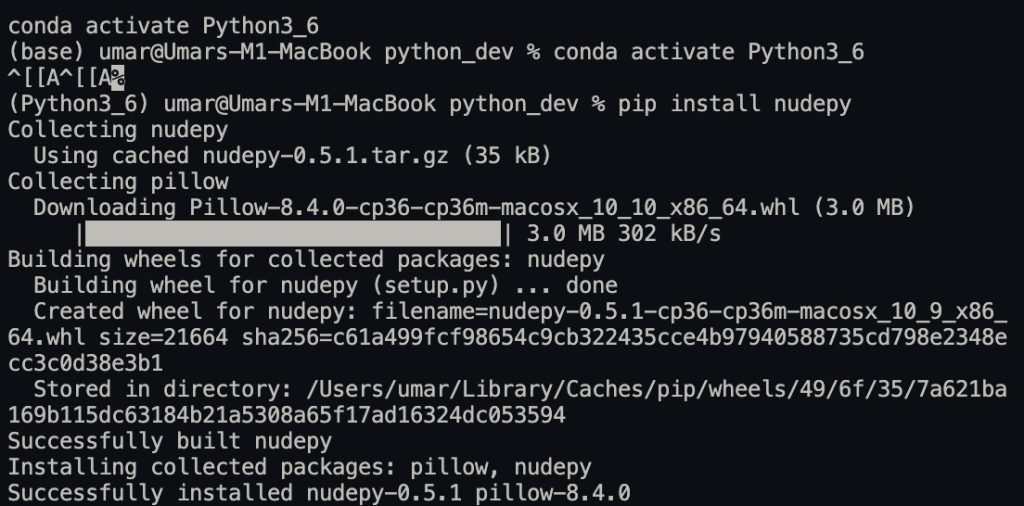

- Python 3.6 -- set up a virtual environment if you don't have one.

- Cython

- Pillow -- it installs with the NudePy library.

- IDE -- I used VS Code for this section.

Note that older versions of Python are also compatible; I just listed the specs I used.

After that, install NudePy with pip:

$ pip install --upgrade nudepy

Then, the next step is to import it into a program:

import nude

Step 2: Running The Image Recognition for Nude Images Script

You only need a few lines, if that, for the program to work.

The library has a function that checks whether the picture you added has nudity. It's called is_nude.

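import nude

from nude import Nude

# Path to the image you want to check

image_path = './nude.rb/spec/images/damita.jpg'

# Quick check: returns True if the image is classified as nude

print(nude.is_nude(image_path))

# Detailed check: parse the image, then print the result and the inspection details

n = Nude(image_path)

n.parse()

print(image_path, ":", n.result, n.inspect())

Does NudePy Recognize Nude Images or Not...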

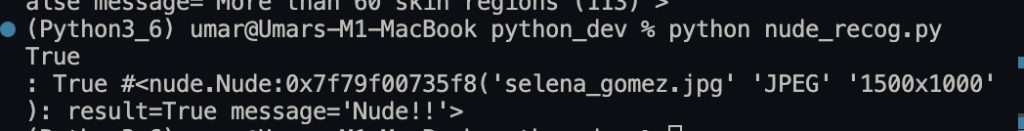

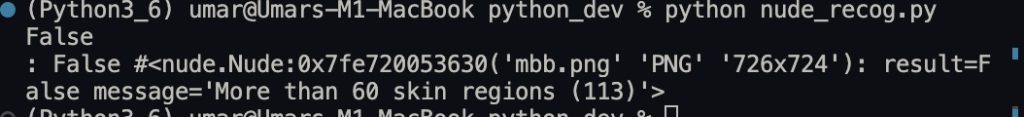

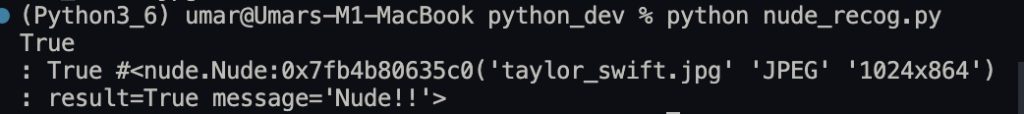

I used three sample images for this test program. Click on the names to access the images I used: Taylor Swift, Selena Gomez and Millie Bobby Brown. None of them were nudes. But as you can see, the false positive rate is high -- 66.67%, meaning two of the three were flagged.

This suggests that it is not a reliable test for nude or NSFW images. Let's try to understand why and how we can develop a better solution.
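For reference, the 66.67% figure is the false positive rate: flagged non-nude images divided by all non-nude images. Here is a small sketch of that arithmetic; the function name is hypothetical, and the order of the flags is arbitrary since only the count matters.

```python
def false_positive_rate(flagged, actually_nude):
    """FP / (FP + TN): the share of non-nude images that were flagged anyway."""
    fp = sum(1 for f, y in zip(flagged, actually_nude) if f and not y)
    tn = sum(1 for f, y in zip(flagged, actually_nude) if not f and not y)
    if fp + tn == 0:
        raise ValueError("no non-nude images to measure against")
    return fp / (fp + tn)


# Three non-nude test images, two of which were flagged.
flagged = [True, True, False]
actually_nude = [False, False, False]
print(f"{false_positive_rate(flagged, actually_nude):.2%}")  # 66.67%
```

With only three images, this number is a rough signal rather than a benchmark.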

Why Do You Need Machine Learning for Classifying Nude Images?

Okay, say you have written a complex algorithm that detects a particular nude image, or maybe even a specific type of nude. What about the others? How can you account for the countless other types? I mean, it's not like the Super Bowl, which only happens once a year, right? You need to make predictions on images countless times.

Machine learning is used because it allows for the efficient and accurate detection of nude images. It is a way to automate identifying nudity, which can be difficult for humans to do consistently and at scale.

What do we do then? Hm? Well, you can use ML to train a model on a dataset of images with and without nudity. The model is then able to identify patterns and features that are characteristic of nudity, such as skin tone, body shape, and context.
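To show what "training on labeled examples" means in the simplest possible terms, here is a toy logistic regression written in plain Python. Everything in it is an assumption for illustration: the two features, the six synthetic samples, and the hyperparameters. Real NSFW models are deep neural networks trained on large image datasets, not two hand-picked numbers.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train(samples, labels, epochs=2000, lr=0.5):
    """Fit weights and a bias with plain stochastic gradient descent on log loss."""
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            err = p - y  # gradient of the log loss with respect to the raw score
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b


def predict_proba(w, b, x):
    """Probability that the sample belongs to class 1."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


# Synthetic features per image: (skin_ratio, largest_skin_region_share).
samples = [(0.9, 0.8), (0.8, 0.7), (0.7, 0.9), (0.1, 0.1), (0.2, 0.05), (0.3, 0.2)]
labels = [1, 1, 1, 0, 0, 0]  # 1 = nudity, 0 = no nudity (made-up labels)

w, b = train(samples, labels)
print(predict_proba(w, b, (0.85, 0.75)) > 0.5)  # True
print(predict_proba(w, b, (0.15, 0.10)) > 0.5)  # False
```

The point is only the workflow: labeled examples go in, the parameters adjust to separate the two classes, and the fitted model then scores images it has not seen.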

So, is it really a feasible solution to identify nude images?

Using machine learning to recognize nudity has several advantages. It allows for the efficient and accurate detection of nudity. This can be helpful in contexts such as content moderation on social media platforms, cloud storage services, and filtering out inappropriate media content such as nude or NSFW images. Also, ML models can learn and adapt over time, so they can become more accurate as more data is fed into them.

However, it's vital to note that using ML to recognize nude images raises ethical concerns, such as bias, invasion of privacy, and inaccuracies.

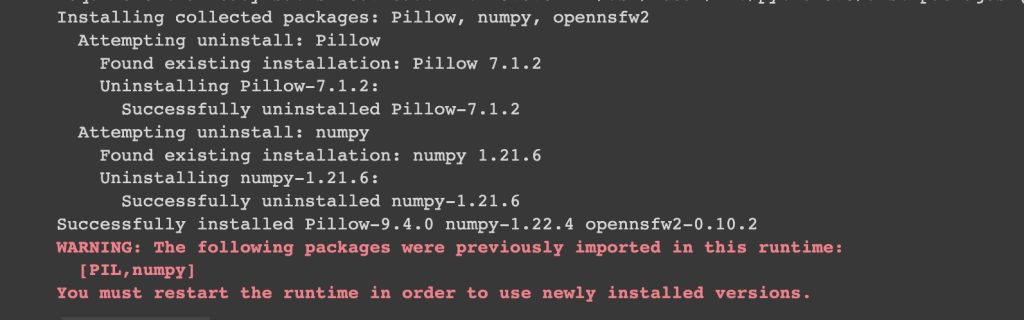

Code with OpenNsfw2

In this step, let's work with a model that is powered by machine learning (ML). Opennsfw2 is a TensorFlow 2 implementation of Yahoo's open-source Open NSFW model.

Libraries:

- Pillow

- Numpy

- Opennsfw2

Before you get started, install the NSFW images identifier library with this:

pip install opennsfw2

As you can see, pillow and numpy were installed as a by-product of this command.

Okay... How Do I Use It For Recognition of Nude Images:

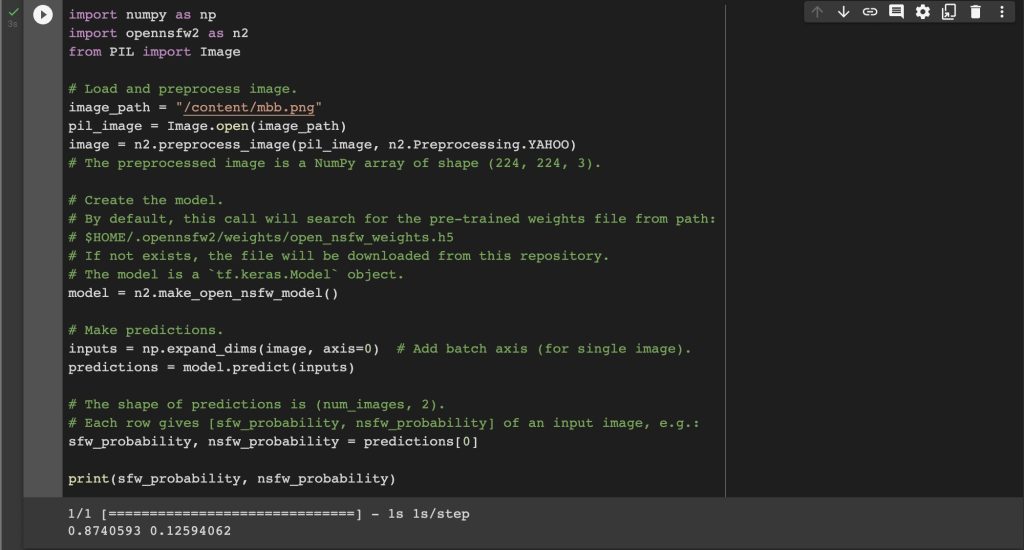

Here is the code below in Python to predict if your dataset includes nude images:

import numpy as np

import opennsfw2 as n2

from PIL import Image

# Load and preprocess image.

image_path = "image_path"

pil_image = Image.open(image_path)

image = n2.preprocess_image(pil_image, n2.Preprocessing.YAHOO)

# The preprocessed image is a NumPy array of shape (224, 224, 3).

# Create the model.

# By default, this call will search for the pre-trained weights file from path:

# $HOME/.opennsfw2/weights/open_nsfw_weights.h5

# If not exists, the file will be downloaded from this repository.

# The model is a `tf.keras.Model` object.

model = n2.make_open_nsfw_model()

# Make predictions.

inputs = np.expand_dims(image, axis=0) # Add batch axis (for single image).

predictions = model.predict(inputs)

# The shape of predictions is (num_images, 2).

# Each row gives [sfw_probability, nsfw_probability] of an input image, e.g.:

sfw_probability, nsfw_probability = predictions[0]

print(sfw_probability, nsfw_probability)

The comments in the code explain what is going on. After importing the libraries, you load an image and preprocess it into a NumPy array. Then, the model is created; its pre-trained weights are downloaded if they are not already on your machine. After that comes the real work you are looking for: the not-safe-for-work prediction. Is the image recognition accurate or not?

This is seen in the output SFW and NSFW probabilities. The sum of both must equal 1.
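Why do the two probabilities sum to 1? A two-class classifier like this typically ends in a softmax layer, which turns raw scores into a probability distribution. The sketch below assumes that setup and uses made-up raw scores; it is not taken from the Opennsfw2 source.

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


sfw, nsfw = softmax([1.2, -0.4])  # made-up raw scores for (SFW, NSFW)
print(abs((sfw + nsfw) - 1.0) < 1e-9)  # True
```

This is also why you only need to look at one of the two numbers: the other is always one minus it.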

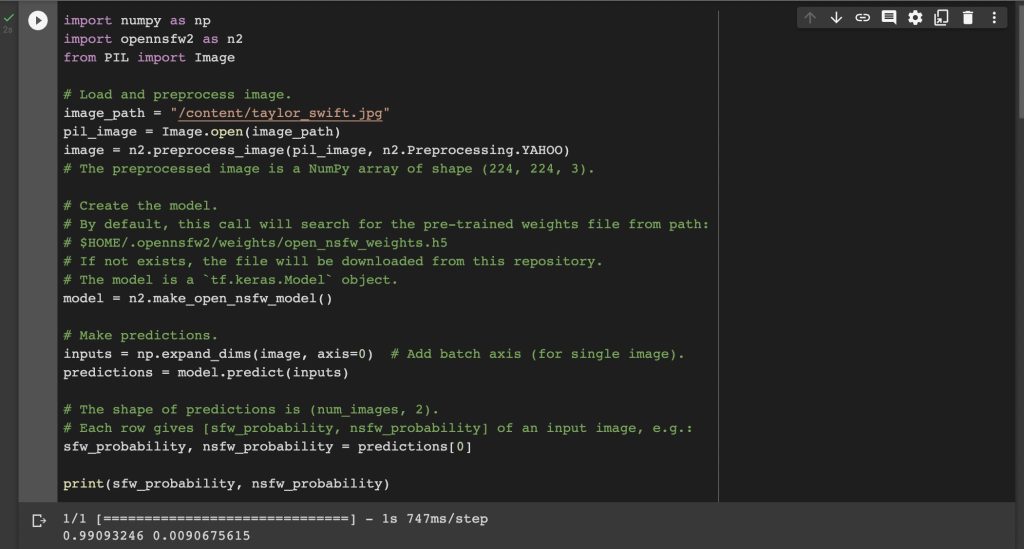

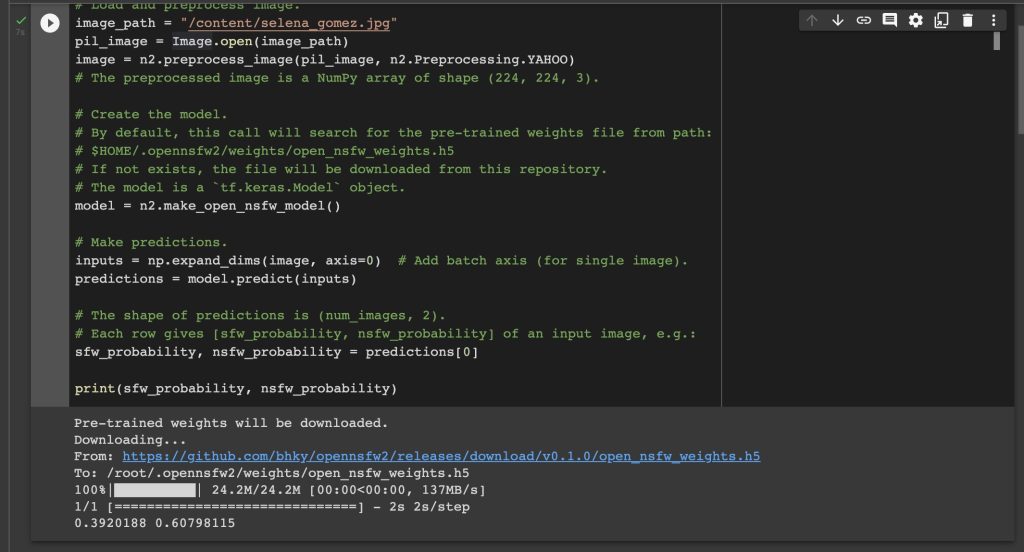

This model uses computer vision to estimate the probability that an image is NSFW or SFW. The images show the results for the pictures of Taylor Swift, Selena Gomez, and Millie Bobby Brown.

Output:

As you can tell, the results still include one false positive. Even though none of the images are actually nude, the model scores one of them as ~60% NSFW. But there are positives to take from this result, no pun intended.

- The NSFW probability is not very high, so a threshold could be used to filter out false positives like this one.

- The false positive rate has dropped from 66.67% (two of three images) to 33.33% (one of three).
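The threshold idea from the first point can be sketched in a few lines. The function name and the 0.8 cutoff are assumptions for illustration. Of the scores, only 0.60 mirrors the ~60% result above and the other two are made up.

```python
def flag_nsfw(nsfw_probabilities, threshold=0.8):
    """Flag an image only when its NSFW probability reaches the threshold."""
    return [p >= threshold for p in nsfw_probabilities]


scores = [0.05, 0.60, 0.10]  # 0.60 stands in for the ~60% false positive

print(flag_nsfw(scores))                 # [False, False, False]
print(flag_nsfw(scores, threshold=0.5))  # [False, True, False]
```

Raising the threshold trades false positives for missed detections, so the right value depends on how costly each kind of mistake is for your use case.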

What's more, you can also use the same library to run recognition on each frame of a video. It returns the elapsed time and NSFW probability for each frame.

import opennsfw2 as n2

# The video can be in any format supported by OpenCV.

video_path = "path/to/your/video.mp4"

# Return two lists giving the elapsed time in seconds and the NSFW probability of each frame.

elapsed_seconds, nsfw_probabilities = n2.predict_video_frames(video_path)

But Wait... There's More...

Finally, there's another library that can perform nude image recognition. Not only that, but it also reports probabilities across five distinct categories: drawing, hentai, neutral, porn, and sexy.

But what is this library, you must be wondering... right? Well, it's NSFW Detector.

Importing Libraries

Requirements:

- Python 3

- TensorFlow 2 -- it is installed with NSFW Detector if you don't have it.

- IDE -- I used Google Colab for this part, mainly because using TensorFlow is not so simple on M1 Macs.

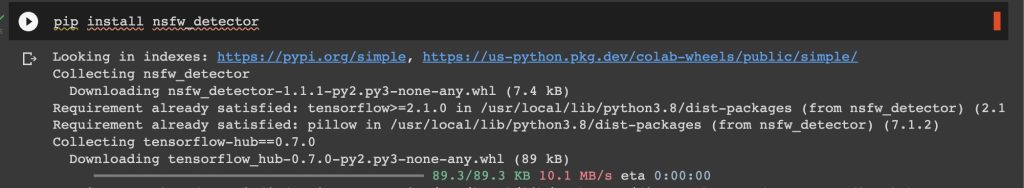

Use this command to install the library in your coding environment or directory.

After that, simply use this line to import it into your program:

import nsfw_detector

How Do I Use This Thing For Image Recognition, Mom?

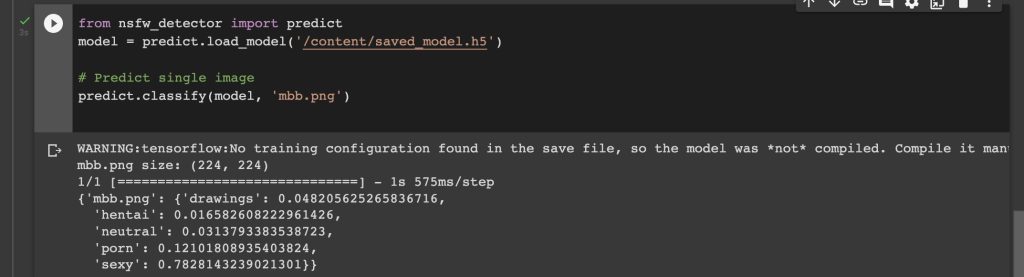

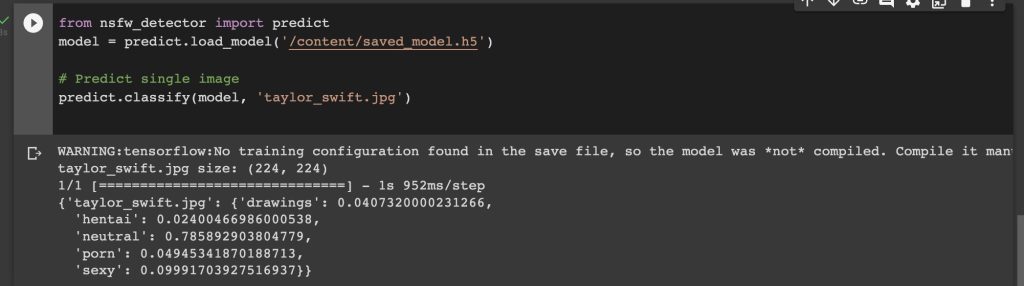

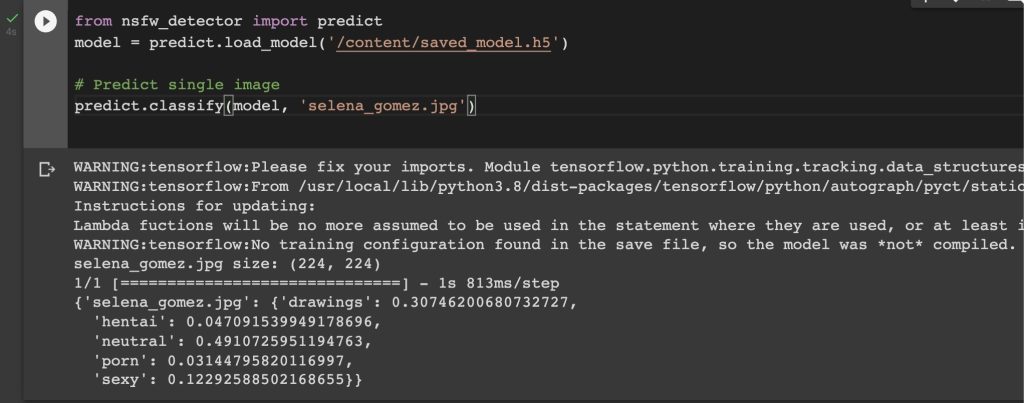

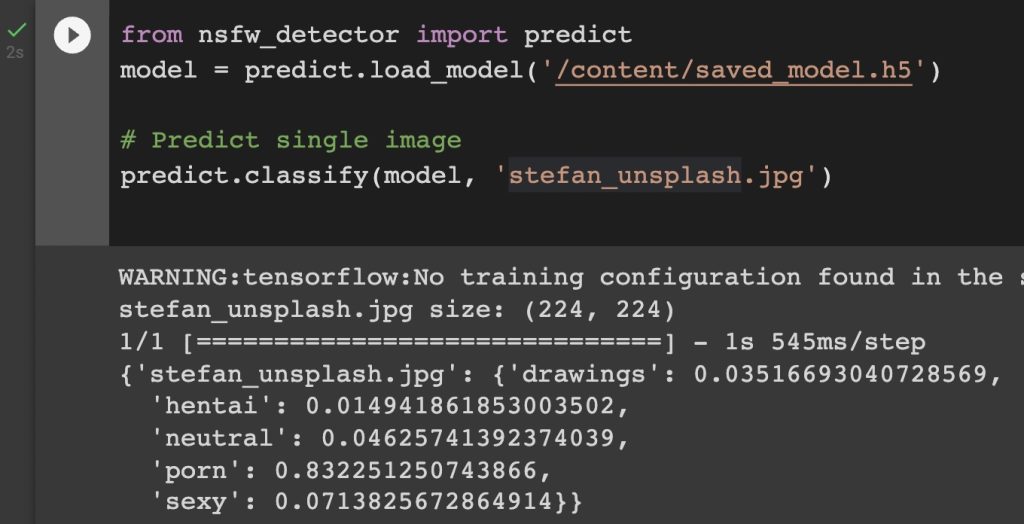

I thought you'd never ask! You did, so kudos. Um, okay, so it's simple, actually. Just import "predict" from the library above.

Then, load a model to use for the prediction of nude images. I downloaded this one.

from nsfw_detector import predict

model = predict.load_model('/content/saved_model.h5')

# Predict single image

predict.classify(model, 'selena_gomez.jpg')

After that, use the classify function, passing in the model and an image. You can also run batch recognition by passing a list of image paths instead of a single path. The sky... is the limit.

Output:

Wow! So none of the non-nude images are classified as NSFW by this model. As the results show, the Selena Gomez image, which the other two models falsely marked as nude, is mostly marked as neutral, though the model also assigns about 30% to the drawing category. The Millie Bobby Brown picture has a high probability of being "sexy" -- nearly 80%. Do you agree with that? Make your decision and let me know. The last image, Taylor Swift's, is given a high neutral probability.

Finally, I used an NSFW photo to test the model's capability to distinguish true nude images, which it marks in the "porn" category. The results are positive, which indicates the model does work. Moreover, I ran multiple tests, and the results were still accurate.
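If you want a single yes/no decision from five category scores, one option is to pick the top category and sum the categories you treat as NSFW. The sketch below is an assumption about how you might do that, not part of NSFW Detector. It counts only "porn" and "hentai" as NSFW, matching the reading above where a high "sexy" score was not treated as NSFW, and the score dictionaries are made-up values that only loosely echo the results described.

```python
# Which categories count as NSFW is a policy choice; this grouping is an assumption.
NSFW_CATEGORIES = {"hentai", "porn"}


def summarize(scores, threshold=0.5):
    """Return (top_category, is_nsfw) for one image's category scores."""
    top = max(scores, key=scores.get)
    nsfw_total = sum(v for k, v in scores.items() if k in NSFW_CATEGORIES)
    return top, nsfw_total >= threshold


# Hypothetical scores, not actual model output.
mostly_neutral = {"drawing": 0.30, "hentai": 0.01, "neutral": 0.65, "porn": 0.02, "sexy": 0.02}
mostly_sexy = {"drawing": 0.01, "hentai": 0.01, "neutral": 0.15, "porn": 0.03, "sexy": 0.80}

print(summarize(mostly_neutral))  # ('neutral', False)
print(summarize(mostly_sexy))     # ('sexy', False)
```

A stricter platform could add "sexy" to the NSFW set or lower the threshold; the code stays the same.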

Okay... Sherlock, but How Can I Tell Deepfakes, usually Nude Images, apart from Real Images?

Gone are the days of easily knowing if an image is altered or real. I mean, do you know about Midjourney? Or ChatGPT?

As a viewer, it can be difficult to distinguish real images from deep fakes. However, there are a few ways to spot potential deep fakes:

- Look for signs of manipulation: Check for unnatural movements or expressions in the image or video, such as blinking eyes that don't match the rest of the face or lips that don't move in sync with the audio.

- Check the source: Be cautious of images or videos that come from untrusted sources or that are shared on social media without any verification.

- Use tools: There are tools available that can help detect deep fakes, such as ones that analyze the image or video for signs of manipulation or compare it to a database of known deep fakes.

- Ask for proof: If you are still unsure about the authenticity of an image or video, ask for additional proof, such as a photo or video taken from another angle or a statement from the person who appears in the image or video.

It's important to note that image recognition technology is constantly evolving, and deep fake technology is improving with it. So, it's key to stay informed about the latest recognition techniques and deep fake detection tools, and to be prepared to question the authenticity of any image or video.

Library Requirements:

- Tensorflow

- Numpy

- Pillow

import tensorflow as tf

from tensorflow import keras

import numpy as np

from PIL import Image

# Load the pre-trained model

model = keras.models.load_model('deepfake_detection_model.h5')

def is_deepfake(img_path):

    # Load the input image as RGB and resize it to the input size the model is assumed to expect

    img = Image.open(img_path).convert("RGB")

    img = img.resize((224, 224))

    # Pre-process the input image: scale pixel values to [0, 1] and add a batch axis

    input_data = np.array(img) / 255.0

    input_data = np.expand_dims(input_data, axis=0)

    # Make predictions with the model

    predictions = model.predict(input_data)

    # Convert the predictions to binary labels (real/deepfake)

    # (the threshold for converting predictions to binary labels will depend on the specific model and task)

    labels = (predictions > 0.5).astype(int)

    if labels[0][0] == 0:

        return "Real"

    else:

        return "Deepfake"

After importing the libraries, you need to load a pre-trained model and add the path. Then, load and preprocess an image you want to make predictions on and use the predict function to find results. Finally, the results are converted to binary values to demarcate real or deep fake images. In the end, a conditional statement leads to the final result.

Weren't You Going to Tell Me about Cloud Storage Moderation, though...

The classification of NSFW (Not Safe For Work) images is crucial for cloud storage providers (CSPs) due to its impact on the overall user experience and legal obligations. Using moderation, you can ensure a secure environment. How? By detecting and flagging any explicit or NSFW content that might violate the provider's terms of service. As such, NiHao Cloud takes this very seriously. It's something it works to improve on every day.

Prohibiting the storage and sharing of NSFW content, such as pornography, hate speech, and graphic violence, is crucial for CSPs to maintain a positive reputation and brand image. It also minimizes potential legal and financial risks. But how does this relate? Storing some categories of this content is illegal in many places.

Do you know how many criminals use cloud storage to hoard illegal content? Most CSPs want to limit this, not just because of negative press but also the implications. This is practically one of the main reasons to work on flagging such content.

Moreover, the automatic identification of NSFW images through image classification technology protects the provider and provides users with a safe place for storing and sharing their files. This can also help foster trust among users and encourage more widespread adoption of cloud storage services. This is, ultimately, the goal of all cloud services. Isn't it?

Lastly, image classification of nude or illegal images is a critical aspect of cloud storage management. It also plays a vital role in preserving a safe and responsible online environment.

Conclusion: Why Classification of Nude Images is Essential

It is truly amazing what technology can do today. With the latest tech like ChatGPT, Whisper AI, Midjourney, and other AI tools, it has become a necessity to classify images based on NSFW categorizations for moderation. Why? Everyone has access to social media and cloud storage services. This means people set up niches for NSFW, sometimes illegal, images or media content. If such content is not flagged, the service provider may also become liable. I mean, how often do you come across something NSFW without consent? Let alone the times criminals use cloud storage for hoarding illegal data.

Moreover, there has been a revolution in the field of image processing with the prevalent use of deep fakes, which makes integrity challenging. As such, it has become increasingly essential to develop models that can tell real images from edited ones. One essential requirement for this is reliability. You need a model that works 9.9 times out of 10, not one that does the same work 6 out of 10 times.

Thank you for reading this post on nude images. I hope you like it. Please let me know below in the comments what you think. This post took longer than I expected -- in my experience, M1 MacBooks are not compatible with most TensorFlow-based models, and they are just not great at image analysis.

You would probably also enjoy a few related posts. Check out Cybersecurity Jobs in 2023 and User Authentication in Python.

Written by: Syed Umar Bukhari.